State-of-the-art password guessing tools, such as HashCat and John the Ripper (JTR), enable users to check billions of passwords per second against password hashes. In addition to straightforward dictionary attacks, these tools can expand dictionaries using password generation rules. Although these rules perform well on current password datasets, creating new rules that are optimized for new datasets is a laborious task that requires specialized expertise.

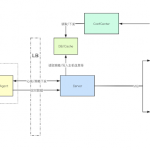

PassGAN uses a novel technique that leverages Generative Adversarial Networks (GANs) to enhance password guessing. PassGAN generates password guesses by training a GAN on a list of leaked passwords. Because the output of the GAN is distributed closely to its training set, the passwords generated using PassGAN are likely to match passwords that have not been leaked yet. PassGAN represents a substantial improvement on rule-based password generation tools because it infers password distribution information autonomously from password data rather than via manual analysis. As a result, it can effortlessly take advantage of new password leaks to generate richer password distributions.

Our experiments show that this approach is very promising. When we evaluated PassGAN on two large password datasets, we were able to outperform JTR’s rules by a 2x factor, and we were competitive with HashCat’s rules – within a 2x factor. More importantly, when we combined the output of PassGAN with the output of HashCat, we were able to match 18%-24% more passwords than HashCat alone. This is remarkable because it shows that PassGAN can generate a considerable number of passwords that are out of reach for current tools.
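The "more passwords than HashCat alone" figure is, at heart, a set computation: count the held-out passwords matched by either tool's guesses and compare that with the count for one tool alone. The following is a minimal sketch of that calculation, not the evaluation code used in the paper. The function names and the toy data are hypothetical and exist only for illustration.

```python
def match_rate(guesses, test_passwords):
    """Fraction of unique test passwords that appear among the guesses."""
    targets = set(test_passwords)
    if not targets:
        return 0.0
    return len(targets & set(guesses)) / len(targets)


def relative_gain(base_guesses, extra_guesses, test_passwords):
    """Relative increase in matches when extra_guesses are added to base_guesses."""
    targets = set(test_passwords)
    base_hits = targets & set(base_guesses)
    combined_hits = targets & (set(base_guesses) | set(extra_guesses))
    if not base_hits:
        raise ValueError("base guesses match nothing; relative gain is undefined")
    return (len(combined_hits) - len(base_hits)) / len(base_hits)


# Toy data, purely illustrative (not taken from any real experiment).
test_set = ["123456", "iloveyou", "dragon99", "qwerty", "sunshine1"]
rule_based = ["123456", "qwerty", "password"]   # stand-in for rule-based guesses
gan_based = ["iloveyou", "qwerty", "monkey12"]  # stand-in for GAN-generated guesses

print(match_rate(rule_based, test_set))                # 2 of 5 test passwords matched
print(relative_gain(rule_based, gan_based, test_set))  # 1 extra match on top of 2
```

Using sets means repeated guesses count once, so the gain reflects only passwords that the second tool reaches and the first does not.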

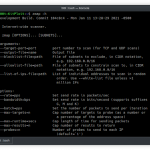

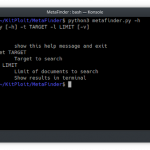

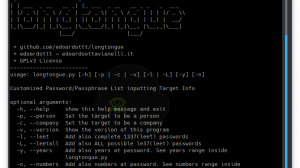

The model from PassGAN is taken from Improved Training of Wasserstein GANs, and it is assumed that the authors of PassGAN used the improved_wgan_training TensorFlow implementation in their work. For this reason, I have modified that reference implementation in this repository to make it easy to train models (train.py) and sample from them (sample.py). This repo contributes:

- A command-line interface

- A pretrained PassGAN model trained on the RockYou dataset

Getting Started

    # requires CUDA to be pre-installed
    pip install -r requirements.txt

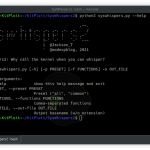

Generating password samples

Use the pretrained model to generate 1,000,000 passwords, saving them to gen_passwords.txt.

    python sample.py \
        --input-dir pretrained \
        --checkpoint pretrained/checkpoints/195000.ckpt \
        --output gen_passwords.txt \
        --batch-size 1024 \
        --num-samples 1000000
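Once sampling finishes, it can be useful to check how many distinct passwords were produced, since a generator may emit the same guess many times. The sketch below assumes gen_passwords.txt holds one password per line, which is an assumption about the output format of sample.py. The `summarize` helper is hypothetical and not part of this repository.

```python
from collections import Counter


def summarize(lines, top=3):
    """Return (total, unique, most_common) for an iterable of password lines."""
    counts = Counter(line.rstrip("\n") for line in lines)
    counts.pop("", None)  # ignore blank lines
    total = sum(counts.values())
    return total, len(counts), counts.most_common(top)


# In-memory stand-in for the generated file. With a real file, assuming one
# password per line, the call would look like:
#   with open("gen_passwords.txt", encoding="utf-8", errors="replace") as f:
#       print(summarize(f))
sample = ["123456\n", "love12\n", "123456\n", "\n", "maria1\n", "123456\n", "love12\n"]
total, unique, common = summarize(sample, top=2)
print(total, unique, common)
```

A low ratio of unique to total guesses suggests that sampling more passwords will yield diminishing returns.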

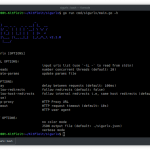

Training your own models

Training a model on a large dataset (100MB+) can take several hours on a GTX 1080.

    # download the rockyou training data
    # contains 80% of the full rockyou passwords (with repeats)
    # that are 10 characters or less
    curl -L -o data/train.txt https://github.com/brannondorsey/PassGAN/releases/download/data/rockyou-train.txt
    # train for 200000 iterations, saving checkpoints every 5000
    # uses the default hyperparameters from the paper
    python train.py --output-dir output --training-data data/train.txt

You are encouraged to train using your own password leaks and datasets. Some great places to find those include:

- LinkedIn leak (1.7GB compressed, direct download. Mirror from Hashes.org)

- Exploit.in torrent (10GB+, 800 million accounts. Infamous!)

- Hashes.org: Awesome shared password recovery site. Consider donating if you have the resources.
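When preparing your own dataset, one option is to mirror the shape of the RockYou training file described in the training comments: passwords of 10 characters or less, repeats kept, and 80% of the entries used for training. The sketch below is one way to do that. Whether the repository's rockyou-train.txt was built exactly like this is an assumption, and the `prepare_split` function, its parameters, and the toy list are hypothetical.

```python
import random


def prepare_split(passwords, max_len=10, train_fraction=0.8, seed=0):
    """Filter by length, shuffle, and split into (train, test) lists.

    Repeats are kept, so frequent passwords stay frequent in the training data.
    """
    kept = [p for p in passwords if 0 < len(p) <= max_len]
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(kept)
    cut = int(len(kept) * train_fraction)
    return kept[:cut], kept[cut:]


# Toy stand-in for a leak; a real leak would be read from a file instead.
leak = ["123456", "123456", "password", "averyverylongpassword", "abc123",
        "letmein", "", "qwerty", "dragon", "111111", "iloveyou", "monkey"]
train, test = prepare_split(leak)
print(len(train), len(test))
```

Writing `train` to a file with one password per line gives something that can be passed to train.py via --training-data, while `test` stays held out for measuring how many unseen passwords the generated guesses match.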
